Terminus Group's Latest Spatial Intelligence Research Accepted by Prestigious AAAI Conference

Terminus Group's Bit Lab Announces Breakthrough: DREAM Method Selected by AAAI International Conference

Terminus Group's Bit Lab recently released its latest research: a new method named DREAM, designed to address the long-standing problem of label scarcity in cross-modal retrieval between 2D images and 3D models. By decoupling discriminative learning and adding a bi-graph alignment mechanism, the method improves the accuracy and efficiency of cross-modal retrieval and strengthens semantic understanding and correlation of spatial data.

The study, titled "DREAM: Decoupled Discriminative Learning with Bigraph-aware Alignment for Semi-Supervised 2D-3D Cross-modal Retrieval", has been officially accepted by the AAAI Conference on Artificial Intelligence (AAAI), a globally recognized academic conference in artificial intelligence that the China Computer Federation (CCF) ranks as Class A.

Global Recognition: The Academic Weight of AAAI

Organized by the Association for the Advancement of Artificial Intelligence, AAAI is one of the oldest and most influential international conferences in AI. It is also listed as a Class A conference by the China Computer Federation (CCF). The acceptance of Terminus Group's work highlights the company's growing research strength in cross-modal learning and its recognition by the global academic community.

Core Innovations of the DREAM Method

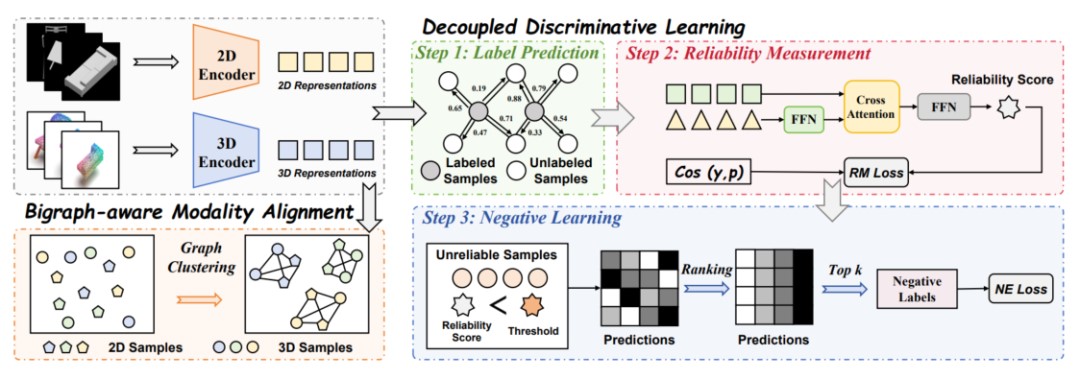

The DREAM method first employs independent encoders to extract multimodal representations. It then applies decoupled discriminative learning, calculating reliability scores for semantic learning and negative learning separately to mitigate bias caused by overconfident models.
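The idea of scoring semantic (positive) learning and negative learning separately can be illustrated with a minimal sketch. This is not the paper's implementation: the function name `decoupled_targets` and both threshold values are hypothetical, and simple confidence thresholds stand in for the reliability scores, whose actual form is not described here. The sketch only shows why the two signals are useful to separate: a sample too uncertain to receive a pseudo-label can still say which classes it almost certainly is not.

```python
from typing import List, Optional, Tuple


def decoupled_targets(
    probs: List[float],
    pos_threshold: float = 0.9,   # hypothetical cutoff for trusting the top class
    neg_threshold: float = 0.05,  # hypothetical cutoff for ruling a class out
) -> Tuple[Optional[int], List[int]]:
    """Split one unlabeled sample's class probabilities into two signals.

    Returns (positive_label, negative_labels):
      - positive_label: the top class if its confidence clears pos_threshold,
        otherwise None (the sample is skipped for semantic learning)
      - negative_labels: classes with probability at or below neg_threshold,
        usable as "this sample is NOT class k" targets
    """
    top = max(range(len(probs)), key=lambda k: probs[k])
    positive = top if probs[top] >= pos_threshold else None
    negatives = [
        k for k, p in enumerate(probs) if p <= neg_threshold and k != top
    ]
    return positive, negatives


# A confident prediction yields both a pseudo-label and negative labels.
confident = decoupled_targets([0.95, 0.03, 0.02])

# An ambiguous prediction yields no pseudo-label, but class 2 can still be
# ruled out, so the sample still contributes to negative learning.
uncertain = decoupled_targets([0.50, 0.47, 0.03])

print(confident)  # (0, [1, 2])
print(uncertain)  # (None, [2])
```

Because each signal has its own criterion, an overconfident model's wrong top-class guess does not automatically decide how the sample is used for negative learning.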

The research team also introduced a bi-graph alignment mechanism, which preserves discriminative power while aligning semantics across modalities, bridging the heterogeneity gap between 2D and 3D data.

This design allows DREAM to efficiently utilize limited labeled samples while fully leveraging the hidden value of unlabeled data, greatly improving the generalization performance of cross-modal retrieval.

Broad Real-World Application Prospects

DREAM demonstrates strong potential in semi-supervised 2D-3D cross-modal retrieval, particularly in scenarios where labeling is costly and data is complex:

Smart Manufacturing and Industrial Inspection: From just a few 2D drawings or photos, the system can quickly retrieve defective samples or match design prototypes from massive 3D point cloud datasets, reducing manual labeling costs.

AR/VR Content Ecosystems: Users can upload a simple 2D sketch and rapidly locate the corresponding models in an unlabeled 3D repository, or search in the opposite direction from a 3D model to 2D images, accelerating virtual environment creation.

Security and Smart Cities: Leveraging drone or surveillance footage, DREAM can identify targets within large-scale unlabeled 3D city point clouds or architectural models, improving emergency response and urban management efficiency.
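In each of these scenarios, the retrieval step itself can be pictured as a nearest-neighbor search in a shared embedding space. The sketch below assumes that the 2D and 3D encoders have already mapped their inputs to vectors of the same dimension; the gallery names, the embedding values, and the use of cosine similarity are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(
    query: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    top_k: int = 2,
) -> List[str]:
    """Return the names of the top_k gallery items most similar to the query."""
    ranked = sorted(
        gallery, key=lambda name: cosine(query, gallery[name]), reverse=True
    )
    return ranked[:top_k]


# Hypothetical 3D-model embeddings (in practice, output of a 3D encoder).
gallery = {
    "chair_mesh": [0.9, 0.1, 0.0],
    "table_mesh": [0.1, 0.9, 0.1],
    "lamp_mesh": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a 2D sketch (in practice, output of a 2D encoder).
query = [0.8, 0.2, 0.1]

results = retrieve(query, gallery)
print(results)  # ['chair_mesh', 'table_mesh']
```

Swapping the roles of query and gallery gives the reverse direction, from a 3D model to matching 2D images. A real system over massive point cloud collections would likely replace the full sort with an approximate nearest-neighbor index.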

Research Value and Future Outlook

The paper emphasizes that with the explosive growth of data, 2D-3D cross-modal retrieval has become a critical direction in AI research. However, challenges remain due to label scarcity and modality differences. DREAM tackles these problems through its decoupled "label prediction–reliability measurement" strategy and its bi-graph alignment mechanism.

Experimental results on multiple authoritative benchmark datasets show that DREAM significantly outperforms state-of-the-art methods, representing a breakthrough in cross-modal learning. Looking ahead, the team will further explore the deployment of DREAM in applications such as smart cities, intelligent manufacturing, and digital twins, accelerating the transition of AI from academic research to industrial practice.