Multiple Terminus Group Spatial Intelligence Papers Accepted at ICCV 2025

Four Papers from the Team Led by Dr. Ling Shao, Chief Scientist of Terminus, Selected for ICCV 2025

Recently, the International Conference on Computer Vision (ICCV), one of the world's premier academic events in computer vision, announced its paper acceptance results for 2025. Dr. Ling Shao, Chief Scientist and International President of Terminus, and his collaborators had four papers accepted, covering research directions such as spatial intelligence, 3D reconstruction, and medical AI. This achievement once again demonstrates the team's sustained progress and strong capabilities in cutting-edge artificial intelligence research.

ICCV 2025: A Global Premier Academic Stage

As one of the "Big Three" computer vision conferences alongside CVPR and ECCV, ICCV is held every two years, bringing together world-leading scholars, researchers, and industry experts. The 2025 edition will take place in Hawaii, USA, from October 19–25.

According to official statistics, ICCV 2025 received 11,239 submissions, nearly triple the number in 2019. A total of 2,699 papers were accepted, an acceptance rate of about 24%, roughly two percentage points lower than in 2023, which highlights the increasingly fierce competition.

Against this backdrop, the acceptance of four papers from Dr. Shao's team underscores its international leadership in computer vision and spatial intelligence.

Accepted Papers

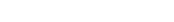

Towards Scalable Spatial Intelligence via 2D-to-3D Data Lifting

This study tackles the long-standing bottleneck of limited 3D training data by proposing an efficient pipeline to lift single-view 2D images into realistic 3D representations. The team also released two large-scale generated datasets—COCO-3D and Objects365-v2-3D—to advance 3D perception, multimodal reasoning, and spatial intelligence applications.

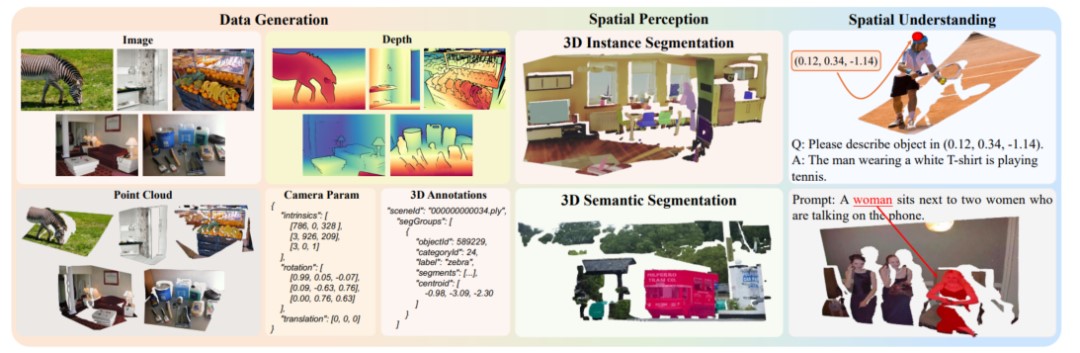

Spatial Preference Rewarding for MLLMs' Spatial Understanding

To address the shortcomings of multimodal large language models (MLLMs) in fine-grained spatial reasoning, this paper introduces the Spatial Preference Rewarding (SPR) framework. By jointly optimizing semantic quality and localization accuracy, the method significantly improves vision-language alignment at minimal training cost and achieves strong performance on multiple benchmarks.

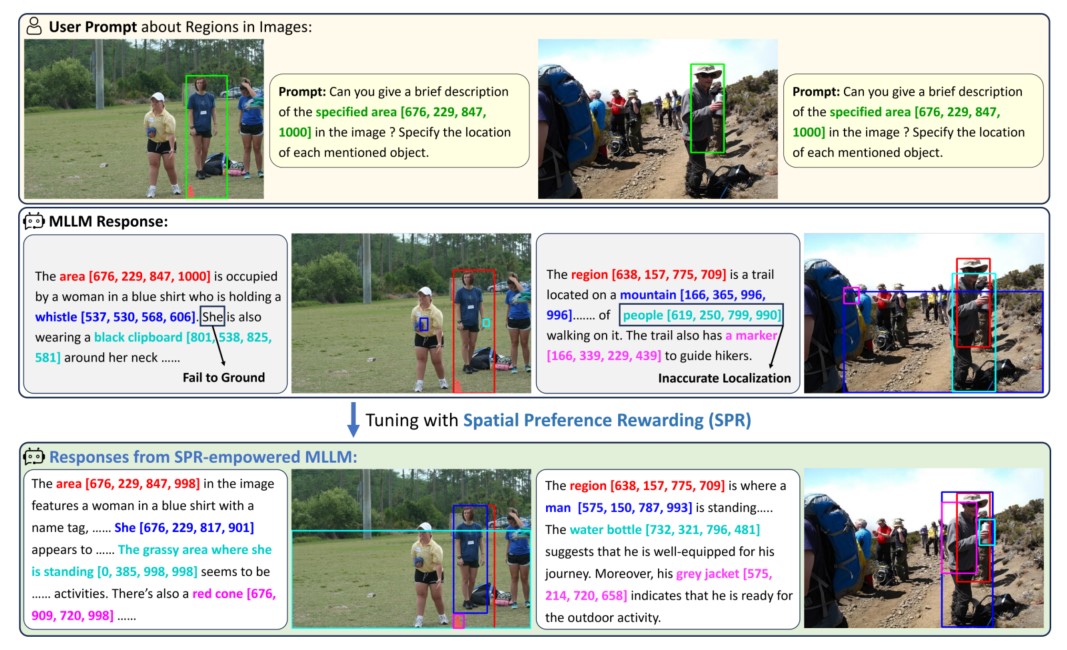

PCR-GS: COLMAP-Free 3D Gaussian Splatting via Pose Co-Regularizations

PCR-GS removes the reliance on COLMAP for 3D reconstruction: it achieves robust 3D modeling without precomputed camera poses by aligning semantics across views and jointly optimizing camera poses with the scene. Experiments confirm its superior performance in dynamic and complex scenarios, marking a major step toward the practical application of 3D Gaussian Splatting.

Scaling Tumor Segmentation: Best Lessons from Real and Synthetic Data

This study highlights the tremendous potential of synthetic data in medical AI, showing that it can reduce annotation requirements while improving model performance. The team introduced CancerVerse, to date the largest tumor dataset with voxel-level annotations, spanning six organs. Results show segmentation improvements of up to 16% on out-of-distribution tasks and 7% on in-distribution tasks, setting a new benchmark for scalable medical AI.

From Academic Breakthroughs to Real-World Applications

Dr. Shao's team not only addresses key challenges in AI, spanning spatial intelligence, cross-modal understanding, and medical applications, but also lays a solid foundation for industry adoption. From 3D data generation to large-model optimization, and from cross-modal reasoning to healthcare deployment, these results highlight Terminus Group's forward-looking vision and its leadership in integrating artificial intelligence deeply into real-world applications.